Cache-Aside Pattern in ASP.NET Core – Improve Performance the Smart Way

The cache-aside pattern is a proven way to improve performance and scalability in ASP.NET Core, especially when the same data is queried frequently from the database. Instead of hitting the data source on every request, the application serves data from a cache and loads it from the source only when needed.

In this article, we’ll break down the cache-aside pattern, explain why it matters, and show you how to implement it in an ASP.NET Core application using IMemoryCache and a distributed cache such as Redis.

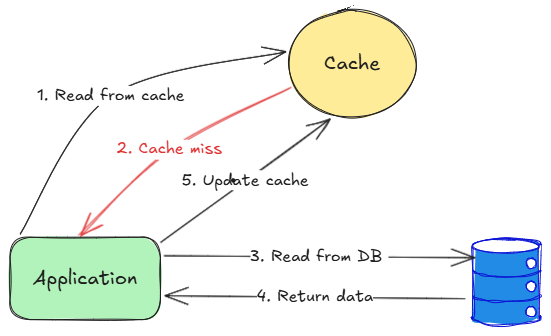

What is the Cache-Aside Pattern?

The Cache-Aside (also known as Lazy Loading) pattern is a caching strategy where the application code is responsible for:

- First checking the cache.

- If the data isn’t available (a cache miss), retrieving it from the data source (like a database).

- Then storing that data in the cache for next time.

So the cache is populated on-demand rather than being loaded upfront.

Benefit: You reduce database hits and improve response times for frequently requested data.
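The three steps above can be sketched without any ASP.NET Core dependency. `SimpleCacheAside` and its `loadFromSource` delegate are hypothetical names used only for illustration. This minimal version has no expiration or eviction, which the real caches covered later in this article provide.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical helper for illustration only; not part of ASP.NET Core.
public sealed class SimpleCacheAside<TKey, TValue>
    where TKey : notnull
    where TValue : class
{
    private readonly ConcurrentDictionary<TKey, TValue> _cache = new();

    public async Task<TValue?> GetAsync(
        TKey key,
        Func<TKey, Task<TValue?>> loadFromSource)
    {
        // Step 1: check the cache first.
        if (_cache.TryGetValue(key, out var cached))
        {
            return cached;
        }

        // Step 2: on a cache miss, go to the data source.
        var value = await loadFromSource(key);

        // Step 3: store the result for next time (null results are not cached).
        if (value is not null)
        {
            _cache[key] = value;
        }

        return value;
    }
}
```

Calling `GetAsync` twice with the same key should invoke `loadFromSource` only on the first call, as long as the source returned a non-null value.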

When Should You Use the Cache-Aside Pattern?

Use this pattern when:

- Data is read frequently but doesn’t change too often.

- You want to avoid unnecessary calls to the database.

- You’re working with large datasets where preloading everything in cache is inefficient.

- Your cache doesn’t support read-through/write-through mechanisms.

Avoid it if:

- Your data is static and fits entirely in the cache. In that case, you might just preload the cache during startup.

- You’re caching session state in a web application that runs across multiple servers without sticky sessions or a distributed cache.
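For the first case, preloading at startup could look roughly like the sketch below. It assumes a hypothetical `ReferenceDataWarmup` hosted service, a made-up cache key, and a made-up country list. A real application would load the data from its own source.

```csharp
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Hosting;

// Hypothetical warm-up service; the key and the data are sample values.
public sealed class ReferenceDataWarmup(IMemoryCache cache) : IHostedService
{
    public Task StartAsync(CancellationToken cancellationToken)
    {
        // Stand-in for a database or configuration lookup.
        var countries = new Dictionary<string, string>
        {
            ["US"] = "United States",
            ["DE"] = "Germany"
        };

        // No expiration is set because the data is assumed to be static.
        cache.Set("reference:countries", countries);
        return Task.CompletedTask;
    }

    public Task StopAsync(CancellationToken cancellationToken) => Task.CompletedTask;
}

// Registration in Program.cs (requires AddMemoryCache as well):
// builder.Services.AddHostedService<ReferenceDataWarmup>();
```

Because the whole dataset is loaded once during application startup, readers never trigger a cache miss, which is why cache-aside adds little in this scenario.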

Implementing Cache-Aside Pattern in ASP.NET Core

Let’s walk through a simple implementation using ASP.NET Core with IMemoryCache.

1. Add Required Services

In Program.cs:

    builder.Services.AddMemoryCache();

If you’re using Redis, add the Microsoft.Extensions.Caching.StackExchangeRedis NuGet package:

    dotnet add package Microsoft.Extensions.Caching.StackExchangeRedis

Then register the Redis cache in Program.cs:

    builder.Services.AddStackExchangeRedisCache(options =>
    {
        options.Configuration = builder.Configuration.GetConnectionString("Redis");
    });

2. Sample Customer Entity and Service

In Customer.cs:

    public sealed class Customer
    {
        public int Id { get; set; }
        public string Name { get; set; } = string.Empty;
    }

In ICustomerRepository.cs:

    public interface ICustomerRepository
    {
        Task<Customer?> GetByIdAsync(int id, CancellationToken cancellationToken);
        Task UpdateAsync(Customer customer, CancellationToken cancellationToken);
    }

In CustomerRepository.cs:

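    public class CustomerRepository : ICustomerRepository
    {
        // In-memory stand-in for a real database (demo only, not thread-safe).
        private static readonly Dictionary<int, Customer> _customers = new()
        {
            { 1, new Customer { Id = 1, Name = "John Doe" } },
            { 2, new Customer { Id = 2, Name = "Jane Smith" } }
        };

        public Task<Customer?> GetByIdAsync(int id, CancellationToken cancellationToken)
        {
            _customers.TryGetValue(id, out var customer);
            return Task.FromResult(customer);
        }

        // Required by ICustomerRepository; adds or replaces the stored customer.
        public Task UpdateAsync(Customer customer, CancellationToken cancellationToken)
        {
            _customers[customer.Id] = customer;
            return Task.CompletedTask;
        }
    }

3. Using Cache-Aside Pattern in Service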

In CustomerService.cs:

    using Microsoft.Extensions.Caching.Memory;

    public sealed class CustomerService(
        ICustomerRepository repository,
        IMemoryCache cache)
    {
        private static readonly TimeSpan CacheExpiration = TimeSpan.FromMinutes(5);

        public async Task<Customer?> GetByIdAsync(
            int id,
            CancellationToken cancellationToken)
        {
            var cacheKey = $"customer:{id}";

            // 1. Check the cache first.
            if (cache.TryGetValue(cacheKey, out Customer? cachedCustomer))
            {
                return cachedCustomer;
            }

            // 2. Cache miss: load from the data source.
            var customer = await repository.GetByIdAsync(id, cancellationToken);

            // 3. Store the result for next time (missing customers are not cached).
            if (customer is not null)
            {
                cache.Set(cacheKey, customer, CacheExpiration);
            }

            return customer;
        }
    }

4. API Endpoint Example

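    // Assumes these registrations were added before builder.Build():
    // builder.Services.AddSingleton<ICustomerRepository, CustomerRepository>();
    // builder.Services.AddScoped<CustomerService>();
    app.MapGet("/customers/{id:int}", async (
        int id,
        CustomerService service,
        CancellationToken cancellationToken) =>
    {
        var customer = await service.GetByIdAsync(id, cancellationToken);

        return customer is not null
            ? Results.Ok(customer)
            : Results.NotFound();
    });

Handling Updates and Deletes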

When the underlying data changes, you must update or remove the corresponding cache entry to avoid stale reads:

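    // Added to CustomerService, next to GetByIdAsync.
    public async Task UpdateAsync(
        Customer customer,
        CancellationToken cancellationToken)
    {
        // Write to the data source first, then drop the stale cache entry.
        await repository.UpdateAsync(customer, cancellationToken);

        var cacheKey = $"customer:{customer.Id}";
        cache.Remove(cacheKey); // Invalidate cache; the next read reloads it
    }

Advanced Considerations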

- Lifetime of Cached Data: Choose expiration policies wisely. If the cache duration is too short, your app will constantly go to the database. If it’s too long, users might get stale data. Try to align cache lifetimes with how often data is updated in your system.

- Cache Eviction: Most caches have limited memory. Items are evicted based on strategies like Least Recently Used (LRU). If certain data is expensive to retrieve, consider assigning a longer expiration or higher priority—even if it’s accessed less frequently.

- Priming the Cache: Sometimes it’s helpful to preload commonly used data into the cache during application startup. This is useful for static reference data or dropdown values that rarely change. Even in cache-aside, preloading can speed up first-time usage.

- Consistency and Staleness: Cache-aside doesn’t ensure real-time consistency. If another service updates your database directly, your app might serve outdated data until the next cache miss occurs and it reloads the latest info. This is an acceptable trade-off in most read-heavy scenarios, but not for critical real-time systems.
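The lifetime and eviction points above map onto `MemoryCacheEntryOptions` when using IMemoryCache. The sketch below is one possible policy for data that is expensive to retrieve. `CacheEntryPolicies` and `ForExpensiveData` are hypothetical names, and the durations are placeholder values that should be tuned to how often the data actually changes.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical helper; names and durations are illustrative assumptions.
public static class CacheEntryPolicies
{
    public static MemoryCacheEntryOptions ForExpensiveData() => new()
    {
        // Hard upper bound: the entry never lives longer than 30 minutes.
        AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(30),

        // The entry expires earlier if it is not read for 5 minutes.
        SlidingExpiration = TimeSpan.FromMinutes(5),

        // Hint that this entry should be kept longer than lower-priority
        // entries when the cache has to free memory.
        Priority = CacheItemPriority.High
    };
}

// Usage inside a service that holds an IMemoryCache:
// cache.Set(cacheKey, customer, CacheEntryPolicies.ForExpensiveData());
```

Combining a sliding window with an absolute limit keeps frequently read entries warm while still bounding how stale they can become.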

In-Memory vs Distributed Cache

- IMemoryCache is great for single-server apps, but each server has its own memory space. That means users on one server might see different cached data than users on another.

- In web farms or cloud-native apps, prefer distributed caching (e.g., Redis or SQL-based cache) to maintain consistency across instances.
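One way to keep application code identical across both setups is to depend on IDistributedCache everywhere and choose the backing store at startup. The sketch below assumes that choice is driven by the hosting environment, which is an illustrative decision rather than a requirement. The "Redis" connection string name is the one used in the registration step earlier in this article.

```csharp
var builder = WebApplication.CreateBuilder(args);

if (builder.Environment.IsDevelopment())
{
    // In-process IDistributedCache implementation: convenient locally,
    // but each instance still has its own copy of the data.
    builder.Services.AddDistributedMemoryCache();
}
else
{
    // Shared cache, so every instance reads and writes the same entries.
    builder.Services.AddStackExchangeRedisCache(options =>
    {
        options.Configuration = builder.Configuration.GetConnectionString("Redis");
    });
}

var app = builder.Build();
app.Run();
```

Services that take an IDistributedCache dependency work unchanged with either registration.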

Distributed Cache with Redis Example

The same logic applies when using IDistributedCache, with serialization:

    using System.Text.Json;
    using Microsoft.Extensions.Caching.Distributed;

    public sealed class DistributedCustomerService(
        IDistributedCache cache,
        ICustomerRepository repository)
    {
        public async Task<Customer?> GetCustomerByIdAsync(
            int id,
            CancellationToken cancellationToken)
        {
            var cacheKey = $"customer:{id}";

            // The distributed cache stores strings or bytes, so the customer is kept as JSON.
            var cachedData = await cache.GetStringAsync(cacheKey, cancellationToken);

            if (!string.IsNullOrEmpty(cachedData))
            {
                return JsonSerializer.Deserialize<Customer>(cachedData);
            }

            var customer = await repository.GetByIdAsync(id, cancellationToken);

            if (customer is not null)
            {
                await cache.SetStringAsync(
                    cacheKey,
                    JsonSerializer.Serialize(customer),
                    new DistributedCacheEntryOptions
                    {
                        AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(5)
                    },
                    cancellationToken);
            }

            return customer;
        }
    }

Summary

The Cache-Aside pattern gives you full control over when and how data is cached. It works well for read-heavy workloads where data doesn’t change constantly.

Takeaways

The Cache-Aside pattern helps reduce the load on your database by caching data only when it’s actually needed. Instead of preloading everything, the cache gets populated on-demand, making it a great fit for scenarios where data access patterns are unpredictable. While it improves performance, it’s important to be mindful of expiration settings and eviction strategies—setting them incorrectly can lead to frequent cache misses or stale data. Additionally, if your application is running across multiple instances (like in a cloud or web farm setup), it’s recommended to use a distributed cache such as Redis to avoid inconsistencies that can occur with in-memory caches.